remove obsolete code

most of the scripts were used for Phabricator integration that is now disabled and current scripts are located in `llvm-project/.ci` . Also removed obsolete playbooks and scripts that were not used and likely no longer work. Updated wording and minor typos. I have tried to look through potential references to the scripts and disabled some of buildkite pipelines that referred to them. That is not a guarantee that this will not break anything as I have not tried to rebuild containers and redeploy cluster.

This commit is contained in:

parent

2d073b0307

commit

7f0564d978

114 changed files with 3 additions and 9405 deletions

87

README.md

87

README.md

|

|

@ -1,6 +1,6 @@

|

||||||

This repo is holding VM configurations for machine cluster and scripts to run pre-merge tests triggered by http://reviews.llvm.org.

|

This repo is holding VM configurations for machine cluster and scripts to run pre-merge tests triggered by http://reviews.llvm.org.

|

||||||

|

|

||||||

As LLVM project has moved to Pull Requests and Phabricator will no longer trigger builds, this repository will likely be gone.

|

As LLVM project has moved to Pull Requests and Phabricator no longer triggers builds, this repository will likely be gone.

|

||||||

|

|

||||||

[Pull request migration schedule](https://discourse.llvm.org/t/pull-request-migration-schedule/71595).

|

[Pull request migration schedule](https://discourse.llvm.org/t/pull-request-migration-schedule/71595).

|

||||||

|

|

||||||

|

|

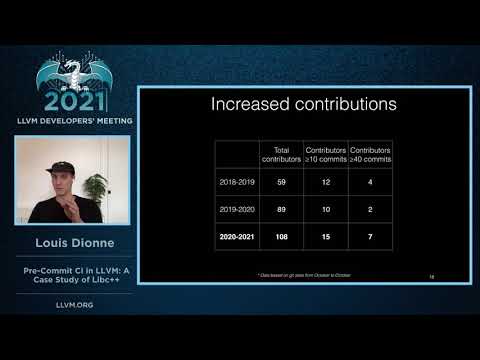

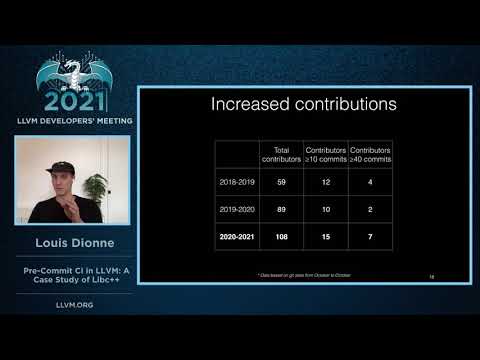

@ -10,100 +10,17 @@ Presentation by Louis Dione on LLVM devmtg 2021 https://youtu.be/B7gB6van7Bw

|

||||||

|

|

||||||

[](https://www.youtube.com/watch?v=B7gB6van7Bw)

|

[](https://www.youtube.com/watch?v=B7gB6van7Bw)

|

||||||

|

|

||||||

The *pre-merge checks* for the [LLVM project](http://llvm.org/) are a

|

|

||||||

[continuous integration

|

|

||||||

(CI)](https://en.wikipedia.org/wiki/Continuous_integration) workflow. The

|

|

||||||

workflow checks the patches the developers upload to the [LLVM

|

|

||||||

Phabricator](https://reviews.llvm.org) instance.

|

|

||||||

|

|

||||||

*Phabricator* (https://reviews.llvm.org) is the code review tool in the LLVM

|

|

||||||

project.

|

|

||||||

|

|

||||||

The workflow checks the patches before a user merges them to the main branch -

|

|

||||||

thus the term *pre-merge testing**. When a user uploads a patch to the LLVM

|

|

||||||

Phabricator, Phabricator triggers the checks and then displays the results.

|

|

||||||

|

|

||||||

The CI system checks the patches **before** a user merges them to the main

|

|

||||||

branch. This way bugs in a patch are contained during the code review stage and

|

|

||||||

do not pollute the main branch. The more bugs the CI system can catch during

|

|

||||||

the code review phase, the more stable and bug-free the main branch will

|

|

||||||

become. <sup>[citation needed]()</sup>

|

|

||||||

|

|

||||||

This repository contains the configurations and script to run pre-merge checks

|

|

||||||

for the LLVM project.

|

|

||||||

|

|

||||||

## Feedback

|

## Feedback

|

||||||

|

|

||||||

If you notice issues or have an idea on how to improve pre-merge checks, please

|

If you notice issues or have an idea on how to improve pre-merge checks, please

|

||||||

create a [new issue](https://github.com/google/llvm-premerge-checks/issues/new)

|

create a [new issue](https://github.com/google/llvm-premerge-checks/issues/new)

|

||||||

or give a :heart: to an existing one.

|

or give a :heart: to an existing one.

|

||||||

|

|

||||||

## Sign up for beta-test

|

|

||||||

|

|

||||||

To get the latest features and help us developing the project, sign up for the

|

|

||||||

pre-merge beta testing by adding yourself to the ["pre-merge beta testing"

|

|

||||||

project](https://reviews.llvm.org/project/members/78/) on Phabricator.

|

|

||||||

|

|

||||||

## Opt-out

|

|

||||||

|

|

||||||

In case you want to opt-out entirely of pre-merge testing, add yourself to the

|

|

||||||

[OPT OUT project](https://reviews.llvm.org/project/view/83/).

|

|

||||||

|

|

||||||

If you decide to opt-out, please let us know why, so we might be able to improve

|

|

||||||

in the future.

|

|

||||||

|

|

||||||

# Requirements

|

|

||||||

|

|

||||||

The builds are only triggered if the Revision in Phabricator is created/updated

|

|

||||||

via `arc diff`. If you update a Revision via the Web UI it will [not

|

|

||||||

trigger](https://secure.phabricator.com/Q447) a build.

|

|

||||||

|

|

||||||

To get a patch on Phabricator tested the build server must be able to apply the

|

|

||||||

patch to the checked out git repository. If you want to get your patch tested,

|

|

||||||

please make sure that either:

|

|

||||||

|

|

||||||

* You set a git hash as `sourceControlBaseRevision` in Phabricator which is

|

|

||||||

* available on the Github repository, **or** you define the dependencies of your

|

|

||||||

* patch in Phabricator, **or** your patch can be applied to the main branch.

|

|

||||||

|

|

||||||

Only then can the build server apply the patch locally and run the builds and

|

|

||||||

tests.

|

|

||||||

|

|

||||||

# Accessing results on Phabricator

|

|

||||||

|

|

||||||

Phabricator will automatically trigger a build for every new patch you upload or

|

|

||||||

modify. Phabricator shows the build results at the top of the entry:

|

|

||||||

|

|

||||||

The CI will compile and run tests, run clang-format and

|

|

||||||

[clang-tidy](docs/clang_tidy.md) on lines changed.

|

|

||||||

|

|

||||||

If a unit test failed, this is shown below the build status. You can also expand

|

|

||||||

the unit test to see the details: .

|

|

||||||

|

|

||||||

# Contributing

|

# Contributing

|

||||||

|

|

||||||

We're happy to get help on improving the infrastructure and workflows!

|

We're happy to get help on improving the infrastructure and workflows!

|

||||||

|

|

||||||

Please check [contibuting](docs/contributing.md) first.

|

Please check [contributing](docs/contributing.md).

|

||||||

|

|

||||||

[Development](docs/development.md) gives an overview how different parts

|

|

||||||

interact together.

|

|

||||||

|

|

||||||

[Playbooks](docs/playbooks.md) shows concrete examples how to, for example,

|

|

||||||

build and run agents locally.

|

|

||||||

|

|

||||||

If you have any questions please contact by [mail](mailto:goncahrov@google.com)

|

|

||||||

or find user "goncharov" on [LLVM Discord](https://discord.gg/xS7Z362).

|

|

||||||

|

|

||||||

# Additional Information

|

|

||||||

|

|

||||||

- [Playbooks](docs/playbooks.md) for installing/upgrading agents and testing

|

|

||||||

changes.

|

|

||||||

|

|

||||||

- [Log of the service

|

|

||||||

operations](https://github.com/google/llvm-premerge-checks/wiki/LLVM-pre-merge-tests-operations-blog)

|

|

||||||

|

|

||||||

# License

|

# License

|

||||||

|

|

||||||

|

|

|

||||||

|

|

@ -1,29 +0,0 @@

|

||||||

# Installation

|

|

||||||

|

|

||||||

Install helm (along with other tools in `local_setup.sh`).

|

|

||||||

|

|

||||||

Once per cluster:

|

|

||||||

|

|

||||||

`helm install arc --namespace "arc-system" --create-namespace oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set-controller`

|

|

||||||

|

|

||||||

## Add new set of runners

|

|

||||||

|

|

||||||

Create runner set first time:

|

|

||||||

|

|

||||||

- copy 'values.yml` and updata parameters: APP ID, target node set, repo etc

|

|

||||||

- run

|

|

||||||

|

|

||||||

```

|

|

||||||

helm install <runner-set> --namespace <k8s-namespace> --create-namespace \

|

|

||||||

--values=$(readlink -f <values.yml>)

|

|

||||||

--set-file=githubConfigSecret.github_app_private_key=$(readlink -f <pem>) \

|

|

||||||

oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set

|

|

||||||

```

|

|

||||||

|

|

||||||

After that update values.yml to use `githubConfigSecret: arc-runner-set-gha-rs-github-secret`.

|

|

||||||

|

|

||||||

## Update

|

|

||||||

|

|

||||||

Example command for linux set:

|

|

||||||

|

|

||||||

`helm upgrade arc-google-linux --namespace arc-linux-prod -f $(readlink -f ~/src/merge-checks/actions-runner-controller/values-llvm.yaml) oci://ghcr.io/actions/actions-runner-controller-charts/gha-runner-scale-set`

|

|

||||||

|

|

@ -1,194 +0,0 @@

|

||||||

# See options doc in https://github.com/actions/actions-runner-controller/tree/master/charts/actions-runner-controller

|

|

||||||

|

|

||||||

## githubConfigUrl is the GitHub url for where you want to configure runners

|

|

||||||

## ex: https://github.com/myorg/myrepo or https://github.com/myorg

|

|

||||||

githubConfigUrl: "https://github.com/llvm/llvm-project"

|

|

||||||

|

|

||||||

## githubConfigSecret is the k8s secrets to use when auth with GitHub API.

|

|

||||||

githubConfigSecret:

|

|

||||||

### GitHub Apps Configuration

|

|

||||||

## NOTE: IDs MUST be strings, use quotes

|

|

||||||

github_app_id: "418336"

|

|

||||||

github_app_installation_id: "43821912"

|

|

||||||

## Pass --set-file=githubConfigSecret.github_app_private_key=<path to pem>

|

|

||||||

# First installation creates this secret.

|

|

||||||

# githubConfigSecret: arc-runner-set-gha-rs-github-secret

|

|

||||||

|

|

||||||

## proxy can be used to define proxy settings that will be used by the

|

|

||||||

## controller, the listener and the runner of this scale set.

|

|

||||||

#

|

|

||||||

# proxy:

|

|

||||||

# http:

|

|

||||||

# url: http://proxy.com:1234

|

|

||||||

# credentialSecretRef: proxy-auth # a secret with `username` and `password` keys

|

|

||||||

# https:

|

|

||||||

# url: http://proxy.com:1234

|

|

||||||

# credentialSecretRef: proxy-auth # a secret with `username` and `password` keys

|

|

||||||

# noProxy:

|

|

||||||

# - example.com

|

|

||||||

# - example.org

|

|

||||||

|

|

||||||

## maxRunners is the max number of runners the autoscaling runner set will scale up to.

|

|

||||||

maxRunners: 3

|

|

||||||

|

|

||||||

## minRunners is the min number of runners the autoscaling runner set will scale down to.

|

|

||||||

minRunners: 1

|

|

||||||

|

|

||||||

runnerGroup: "generic-google-cloud-2"

|

|

||||||

|

|

||||||

## name of the runner scale set to create. Defaults to the helm release name

|

|

||||||

# runnerScaleSetName: ""

|

|

||||||

|

|

||||||

## A self-signed CA certificate for communication with the GitHub server can be

|

|

||||||

## provided using a config map key selector. If `runnerMountPath` is set, for

|

|

||||||

## each runner pod ARC will:

|

|

||||||

## - create a `github-server-tls-cert` volume containing the certificate

|

|

||||||

## specified in `certificateFrom`

|

|

||||||

## - mount that volume on path `runnerMountPath`/{certificate name}

|

|

||||||

## - set NODE_EXTRA_CA_CERTS environment variable to that same path

|

|

||||||

## - set RUNNER_UPDATE_CA_CERTS environment variable to "1" (as of version

|

|

||||||

## 2.303.0 this will instruct the runner to reload certificates on the host)

|

|

||||||

##

|

|

||||||

## If any of the above had already been set by the user in the runner pod

|

|

||||||

## template, ARC will observe those and not overwrite them.

|

|

||||||

## Example configuration:

|

|

||||||

#

|

|

||||||

# githubServerTLS:

|

|

||||||

# certificateFrom:

|

|

||||||

# configMapKeyRef:

|

|

||||||

# name: config-map-name

|

|

||||||

# key: ca.crt

|

|

||||||

# runnerMountPath: /usr/local/share/ca-certificates/

|

|

||||||

|

|

||||||

## Container mode is an object that provides out-of-box configuration

|

|

||||||

## for dind and kubernetes mode. Template will be modified as documented under the

|

|

||||||

## template object.

|

|

||||||

##

|

|

||||||

## If any customization is required for dind or kubernetes mode, containerMode should remain

|

|

||||||

## empty, and configuration should be applied to the template.

|

|

||||||

# containerMode:

|

|

||||||

# type: "dind" ## type can be set to dind or kubernetes

|

|

||||||

# ## the following is required when containerMode.type=kubernetes

|

|

||||||

# kubernetesModeWorkVolumeClaim:

|

|

||||||

# accessModes: ["ReadWriteOnce"]

|

|

||||||

# # For local testing, use https://github.com/openebs/dynamic-localpv-provisioner/blob/develop/docs/quickstart.md to provide dynamic provision volume with storageClassName: openebs-hostpath

|

|

||||||

# storageClassName: "dynamic-blob-storage"

|

|

||||||

# resources:

|

|

||||||

# requests:

|

|

||||||

# storage: 1Gi

|

|

||||||

# kubernetesModeServiceAccount:

|

|

||||||

# annotations:

|

|

||||||

|

|

||||||

## template is the PodSpec for each listener Pod

|

|

||||||

## For reference: https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#PodSpec

|

|

||||||

# listenerTemplate:

|

|

||||||

# spec:

|

|

||||||

# containers:

|

|

||||||

# # Use this section to append additional configuration to the listener container.

|

|

||||||

# # If you change the name of the container, the configuration will not be applied to the listener,

|

|

||||||

# # and it will be treated as a side-car container.

|

|

||||||

# - name: listener

|

|

||||||

# securityContext:

|

|

||||||

# runAsUser: 1000

|

|

||||||

# # Use this section to add the configuration of a side-car container.

|

|

||||||

# # Comment it out or remove it if you don't need it.

|

|

||||||

# # Spec for this container will be applied as is without any modifications.

|

|

||||||

# - name: side-car

|

|

||||||

# image: example-sidecar

|

|

||||||

|

|

||||||

## template is the PodSpec for each runner Pod

|

|

||||||

## For reference: https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#PodSpec

|

|

||||||

## template.spec will be modified if you change the container mode

|

|

||||||

## with containerMode.type=dind, we will populate the template.spec with following pod spec

|

|

||||||

## template:

|

|

||||||

## spec:

|

|

||||||

## initContainers:

|

|

||||||

## - name: init-dind-externals

|

|

||||||

## image: ghcr.io/actions/actions-runner:latest

|

|

||||||

## command: ["cp", "-r", "-v", "/home/runner/externals/.", "/home/runner/tmpDir/"]

|

|

||||||

## volumeMounts:

|

|

||||||

## - name: dind-externals

|

|

||||||

## mountPath: /home/runner/tmpDir

|

|

||||||

## containers:

|

|

||||||

## - name: runner

|

|

||||||

## image: ghcr.io/actions/actions-runner:latest

|

|

||||||

## command: ["/home/runner/run.sh"]

|

|

||||||

## env:

|

|

||||||

## - name: DOCKER_HOST

|

|

||||||

## value: unix:///run/docker/docker.sock

|

|

||||||

## volumeMounts:

|

|

||||||

## - name: work

|

|

||||||

## mountPath: /home/runner/_work

|

|

||||||

## - name: dind-sock

|

|

||||||

## mountPath: /run/docker

|

|

||||||

## readOnly: true

|

|

||||||

## - name: dind

|

|

||||||

## image: docker:dind

|

|

||||||

## args:

|

|

||||||

## - dockerd

|

|

||||||

## - --host=unix:///run/docker/docker.sock

|

|

||||||

## - --group=$(DOCKER_GROUP_GID)

|

|

||||||

## env:

|

|

||||||

## - name: DOCKER_GROUP_GID

|

|

||||||

## value: "123"

|

|

||||||

## securityContext:

|

|

||||||

## privileged: true

|

|

||||||

## volumeMounts:

|

|

||||||

## - name: work

|

|

||||||

## mountPath: /home/runner/_work

|

|

||||||

## - name: dind-sock

|

|

||||||

## mountPath: /run/docker

|

|

||||||

## - name: dind-externals

|

|

||||||

## mountPath: /home/runner/externals

|

|

||||||

## volumes:

|

|

||||||

## - name: work

|

|

||||||

## emptyDir: {}

|

|

||||||

## - name: dind-sock

|

|

||||||

## emptyDir: {}

|

|

||||||

## - name: dind-externals

|

|

||||||

## emptyDir: {}

|

|

||||||

######################################################################################################

|

|

||||||

## with containerMode.type=kubernetes, we will populate the template.spec with following pod spec

|

|

||||||

template:

|

|

||||||

spec:

|

|

||||||

containers:

|

|

||||||

- name: runner

|

|

||||||

image: us-central1-docker.pkg.dev/llvm-premerge-checks/docker/github-linux:latest

|

|

||||||

command: ["/bin/bash"]

|

|

||||||

args: ["-c", "/entrypoint.sh /home/runner/run.sh"]

|

|

||||||

env:

|

|

||||||

- name: ACTIONS_RUNNER_CONTAINER_HOOKS

|

|

||||||

value: /home/runner/k8s/index.js

|

|

||||||

- name: ACTIONS_RUNNER_POD_NAME

|

|

||||||

valueFrom:

|

|

||||||

fieldRef:

|

|

||||||

fieldPath: metadata.name

|

|

||||||

- name: ACTIONS_RUNNER_REQUIRE_JOB_CONTAINER

|

|

||||||

value: "false"

|

|

||||||

- name: WORKDIR

|

|

||||||

value: "/home/runner/_work"

|

|

||||||

resources:

|

|

||||||

limits:

|

|

||||||

cpu: 31

|

|

||||||

memory: 80Gi

|

|

||||||

requests:

|

|

||||||

cpu: 31

|

|

||||||

memory: 80Gi

|

|

||||||

volumeMounts:

|

|

||||||

- name: work

|

|

||||||

mountPath: /home/runner/_work

|

|

||||||

volumes:

|

|

||||||

- name: work

|

|

||||||

emptyDir: {}

|

|

||||||

nodeSelector:

|

|

||||||

cloud.google.com/gke-nodepool: linux-agents-2

|

|

||||||

|

|

||||||

## Optional controller service account that needs to have required Role and RoleBinding

|

|

||||||

## to operate this gha-runner-scale-set installation.

|

|

||||||

## The helm chart will try to find the controller deployment and its service account at installation time.

|

|

||||||

## In case the helm chart can't find the right service account, you can explicitly pass in the following value

|

|

||||||

## to help it finish RoleBinding with the right service account.

|

|

||||||

## Note: if your controller is installed to only watch a single namespace, you have to pass these values explicitly.

|

|

||||||

# controllerServiceAccount:

|

|

||||||

# namespace: arc-system

|

|

||||||

# name: test-arc-gha-runner-scale-set-controller

|

|

||||||

|

|

@ -1,194 +0,0 @@

|

||||||

# See options doc in https://github.com/actions/actions-runner-controller/tree/master/charts/actions-runner-controller

|

|

||||||

|

|

||||||

## githubConfigUrl is the GitHub url for where you want to configure runners

|

|

||||||

## ex: https://github.com/myorg/myrepo or https://github.com/myorg

|

|

||||||

githubConfigUrl: "https://github.com/metafloworg/llvm-project"

|

|

||||||

|

|

||||||

## githubConfigSecret is the k8s secrets to use when auth with GitHub API.

|

|

||||||

#githubConfigSecret:

|

|

||||||

### GitHub Apps Configuration

|

|

||||||

## NOTE: IDs MUST be strings, use quotes

|

|

||||||

#github_app_id: "427039"

|

|

||||||

#github_app_installation_id: "43840704"

|

|

||||||

## Pass --set-file=githubConfigSecret.github_app_private_key=<path to pem>

|

|

||||||

# First installation creates this secret.

|

|

||||||

githubConfigSecret: arc-runner-set-gha-rs-github-secret

|

|

||||||

|

|

||||||

## proxy can be used to define proxy settings that will be used by the

|

|

||||||

## controller, the listener and the runner of this scale set.

|

|

||||||

#

|

|

||||||

# proxy:

|

|

||||||

# http:

|

|

||||||

# url: http://proxy.com:1234

|

|

||||||

# credentialSecretRef: proxy-auth # a secret with `username` and `password` keys

|

|

||||||

# https:

|

|

||||||

# url: http://proxy.com:1234

|

|

||||||

# credentialSecretRef: proxy-auth # a secret with `username` and `password` keys

|

|

||||||

# noProxy:

|

|

||||||

# - example.com

|

|

||||||

# - example.org

|

|

||||||

|

|

||||||

## maxRunners is the max number of runners the autoscaling runner set will scale up to.

|

|

||||||

maxRunners: 3

|

|

||||||

|

|

||||||

## minRunners is the min number of runners the autoscaling runner set will scale down to.

|

|

||||||

minRunners: 0

|

|

||||||

|

|

||||||

# runnerGroup: "default"

|

|

||||||

|

|

||||||

## name of the runner scale set to create. Defaults to the helm release name

|

|

||||||

# runnerScaleSetName: ""

|

|

||||||

|

|

||||||

## A self-signed CA certificate for communication with the GitHub server can be

|

|

||||||

## provided using a config map key selector. If `runnerMountPath` is set, for

|

|

||||||

## each runner pod ARC will:

|

|

||||||

## - create a `github-server-tls-cert` volume containing the certificate

|

|

||||||

## specified in `certificateFrom`

|

|

||||||

## - mount that volume on path `runnerMountPath`/{certificate name}

|

|

||||||

## - set NODE_EXTRA_CA_CERTS environment variable to that same path

|

|

||||||

## - set RUNNER_UPDATE_CA_CERTS environment variable to "1" (as of version

|

|

||||||

## 2.303.0 this will instruct the runner to reload certificates on the host)

|

|

||||||

##

|

|

||||||

## If any of the above had already been set by the user in the runner pod

|

|

||||||

## template, ARC will observe those and not overwrite them.

|

|

||||||

## Example configuration:

|

|

||||||

#

|

|

||||||

# githubServerTLS:

|

|

||||||

# certificateFrom:

|

|

||||||

# configMapKeyRef:

|

|

||||||

# name: config-map-name

|

|

||||||

# key: ca.crt

|

|

||||||

# runnerMountPath: /usr/local/share/ca-certificates/

|

|

||||||

|

|

||||||

## Container mode is an object that provides out-of-box configuration

|

|

||||||

## for dind and kubernetes mode. Template will be modified as documented under the

|

|

||||||

## template object.

|

|

||||||

##

|

|

||||||

## If any customization is required for dind or kubernetes mode, containerMode should remain

|

|

||||||

## empty, and configuration should be applied to the template.

|

|

||||||

# containerMode:

|

|

||||||

# type: "dind" ## type can be set to dind or kubernetes

|

|

||||||

# ## the following is required when containerMode.type=kubernetes

|

|

||||||

# kubernetesModeWorkVolumeClaim:

|

|

||||||

# accessModes: ["ReadWriteOnce"]

|

|

||||||

# # For local testing, use https://github.com/openebs/dynamic-localpv-provisioner/blob/develop/docs/quickstart.md to provide dynamic provision volume with storageClassName: openebs-hostpath

|

|

||||||

# storageClassName: "dynamic-blob-storage"

|

|

||||||

# resources:

|

|

||||||

# requests:

|

|

||||||

# storage: 1Gi

|

|

||||||

# kubernetesModeServiceAccount:

|

|

||||||

# annotations:

|

|

||||||

|

|

||||||

## template is the PodSpec for each listener Pod

|

|

||||||

## For reference: https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#PodSpec

|

|

||||||

# listenerTemplate:

|

|

||||||

# spec:

|

|

||||||

# containers:

|

|

||||||

# # Use this section to append additional configuration to the listener container.

|

|

||||||

# # If you change the name of the container, the configuration will not be applied to the listener,

|

|

||||||

# # and it will be treated as a side-car container.

|

|

||||||

# - name: listener

|

|

||||||

# securityContext:

|

|

||||||

# runAsUser: 1000

|

|

||||||

# # Use this section to add the configuration of a side-car container.

|

|

||||||

# # Comment it out or remove it if you don't need it.

|

|

||||||

# # Spec for this container will be applied as is without any modifications.

|

|

||||||

# - name: side-car

|

|

||||||

# image: example-sidecar

|

|

||||||

|

|

||||||

## template is the PodSpec for each runner Pod

|

|

||||||

## For reference: https://kubernetes.io/docs/reference/kubernetes-api/workload-resources/pod-v1/#PodSpec

|

|

||||||

## template.spec will be modified if you change the container mode

|

|

||||||

## with containerMode.type=dind, we will populate the template.spec with following pod spec

|

|

||||||

## template:

|

|

||||||

## spec:

|

|

||||||

## initContainers:

|

|

||||||

## - name: init-dind-externals

|

|

||||||

## image: ghcr.io/actions/actions-runner:latest

|

|

||||||

## command: ["cp", "-r", "-v", "/home/runner/externals/.", "/home/runner/tmpDir/"]

|

|

||||||

## volumeMounts:

|

|

||||||

## - name: dind-externals

|

|

||||||

## mountPath: /home/runner/tmpDir

|

|

||||||

## containers:

|

|

||||||

## - name: runner

|

|

||||||

## image: ghcr.io/actions/actions-runner:latest

|

|

||||||

## command: ["/home/runner/run.sh"]

|

|

||||||

## env:

|

|

||||||

## - name: DOCKER_HOST

|

|

||||||

## value: unix:///run/docker/docker.sock

|

|

||||||

## volumeMounts:

|

|

||||||

## - name: work

|

|

||||||

## mountPath: /home/runner/_work

|

|

||||||

## - name: dind-sock

|

|

||||||

## mountPath: /run/docker

|

|

||||||

## readOnly: true

|

|

||||||

## - name: dind

|

|

||||||

## image: docker:dind

|

|

||||||

## args:

|

|

||||||

## - dockerd

|

|

||||||

## - --host=unix:///run/docker/docker.sock

|

|

||||||

## - --group=$(DOCKER_GROUP_GID)

|

|

||||||

## env:

|

|

||||||

## - name: DOCKER_GROUP_GID

|

|

||||||

## value: "123"

|

|

||||||

## securityContext:

|

|

||||||

## privileged: true

|

|

||||||

## volumeMounts:

|

|

||||||

## - name: work

|

|

||||||

## mountPath: /home/runner/_work

|

|

||||||

## - name: dind-sock

|

|

||||||

## mountPath: /run/docker

|

|

||||||

## - name: dind-externals

|

|

||||||

## mountPath: /home/runner/externals

|

|

||||||

## volumes:

|

|

||||||

## - name: work

|

|

||||||

## emptyDir: {}

|

|

||||||

## - name: dind-sock

|

|

||||||

## emptyDir: {}

|

|

||||||

## - name: dind-externals

|

|

||||||

## emptyDir: {}

|

|

||||||

######################################################################################################

|

|

||||||

## with containerMode.type=kubernetes, we will populate the template.spec with following pod spec

|

|

||||||

template:

|

|

||||||

spec:

|

|

||||||

containers:

|

|

||||||

- name: runner

|

|

||||||

image: us-central1-docker.pkg.dev/llvm-premerge-checks/docker/github-linux:latest

|

|

||||||

command: ["/bin/bash"]

|

|

||||||

args: ["-c", "/entrypoint.sh /home/runner/run.sh"]

|

|

||||||

env:

|

|

||||||

- name: ACTIONS_RUNNER_CONTAINER_HOOKS

|

|

||||||

value: /home/runner/k8s/index.js

|

|

||||||

- name: ACTIONS_RUNNER_POD_NAME

|

|

||||||

valueFrom:

|

|

||||||

fieldRef:

|

|

||||||

fieldPath: metadata.name

|

|

||||||

- name: ACTIONS_RUNNER_REQUIRE_JOB_CONTAINER

|

|

||||||

value: "false"

|

|

||||||

- name: WORKDIR

|

|

||||||

value: "/home/runner/_work"

|

|

||||||

resources:

|

|

||||||

limits:

|

|

||||||

cpu: 31

|

|

||||||

memory: 80Gi

|

|

||||||

requests:

|

|

||||||

cpu: 31

|

|

||||||

memory: 80Gi

|

|

||||||

volumeMounts:

|

|

||||||

- name: work

|

|

||||||

mountPath: /home/runner/_work

|

|

||||||

volumes:

|

|

||||||

- name: work

|

|

||||||

emptyDir: {}

|

|

||||||

nodeSelector:

|

|

||||||

cloud.google.com/gke-nodepool: linux-agents-2

|

|

||||||

|

|

||||||

## Optional controller service account that needs to have required Role and RoleBinding

|

|

||||||

## to operate this gha-runner-scale-set installation.

|

|

||||||

## The helm chart will try to find the controller deployment and its service account at installation time.

|

|

||||||

## In case the helm chart can't find the right service account, you can explicitly pass in the following value

|

|

||||||

## to help it finish RoleBinding with the right service account.

|

|

||||||

## Note: if your controller is installed to only watch a single namespace, you have to pass these values explicitly.

|

|

||||||

# controllerServiceAccount:

|

|

||||||

# namespace: arc-system

|

|

||||||

# name: test-arc-gha-runner-scale-set-controller

|

|

||||||

|

|

@ -5,9 +5,6 @@ for the merge guards.

|

||||||

## Scripts

|

## Scripts

|

||||||

The scripts are written in bash (for Linux) and powershell (for Windows).

|

The scripts are written in bash (for Linux) and powershell (for Windows).

|

||||||

|

|

||||||

### build_run.(sh|ps1)

|

|

||||||

Build the docker image and run it locally. This is useful for testing it.

|

|

||||||

|

|

||||||

### build_deploy.(sh|ps1)

|

### build_deploy.(sh|ps1)

|

||||||

Build the docker and deploy it to the GCP registry. This is useful for deploying

|

Build the docker and deploy it to the GCP registry. This is useful for deploying

|

||||||

it in the Kubernetes cluster.

|

it in the Kubernetes cluster.

|

||||||

|

|

|

||||||

|

|

@ -1 +0,0 @@

|

||||||

cloudbuild.yaml

|

|

||||||

|

|

@ -1,80 +0,0 @@

|

||||||

FROM debian:stable

|

|

||||||

|

|

||||||

RUN echo 'intall packages'; \

|

|

||||||

apt-get update; \

|

|

||||||

apt-get install -y --no-install-recommends \

|

|

||||||

locales openssh-client gnupg ca-certificates \

|

|

||||||

zip wget git \

|

|

||||||

gdb build-essential \

|

|

||||||

ninja-build \

|

|

||||||

libelf-dev libffi-dev gcc-multilib \

|

|

||||||

# for llvm-libc tests that build mpfr and gmp from source

|

|

||||||

autoconf automake libtool \

|

|

||||||

ccache \

|

|

||||||

python3 python3-psutil \

|

|

||||||

python3-pip python3-setuptools \

|

|

||||||

lsb-release software-properties-common \

|

|

||||||

swig python3-dev libedit-dev libncurses5-dev libxml2-dev liblzma-dev golang rsync jq \

|

|

||||||

# for llvm installation script

|

|

||||||

sudo;

|

|

||||||

|

|

||||||

# debian stable cmake is 3.18, we need to install a more recent version.

|

|

||||||

RUN wget --no-verbose -O /cmake.sh https://github.com/Kitware/CMake/releases/download/v3.23.3/cmake-3.23.3-linux-x86_64.sh; \

|

|

||||||

chmod +x /cmake.sh; \

|

|

||||||

mkdir -p /etc/cmake; \

|

|

||||||

/cmake.sh --prefix=/etc/cmake --skip-license; \

|

|

||||||

ln -s /etc/cmake/bin/cmake /usr/bin/cmake; \

|

|

||||||

cmake --version; \

|

|

||||||

rm /cmake.sh

|

|

||||||

|

|

||||||

# LLVM must be installed after prerequsite packages.

|

|

||||||

ENV LLVM_VERSION=16

|

|

||||||

RUN echo 'install llvm ${LLVM_VERSION}'; \

|

|

||||||

wget --no-verbose https://apt.llvm.org/llvm.sh; \

|

|

||||||

chmod +x llvm.sh; \

|

|

||||||

./llvm.sh ${LLVM_VERSION};\

|

|

||||||

apt-get update; \

|

|

||||||

apt install -y clang-${LLVM_VERSION} clang-format-${LLVM_VERSION} clang-tidy-${LLVM_VERSION} lld-${LLVM_VERSION}; \

|

|

||||||

ln -s /usr/bin/clang-${LLVM_VERSION} /usr/bin/clang;\

|

|

||||||

ln -s /usr/bin/clang++-${LLVM_VERSION} /usr/bin/clang++;\

|

|

||||||

ln -s /usr/bin/clang-tidy-${LLVM_VERSION} /usr/bin/clang-tidy;\

|

|

||||||

ln -s /usr/bin/clang-tidy-diff-${LLVM_VERSION}.py /usr/bin/clang-tidy-diff;\

|

|

||||||

ln -s /usr/bin/clang-format-${LLVM_VERSION} /usr/bin/clang-format;\

|

|

||||||

ln -s /usr/bin/clang-format-diff-${LLVM_VERSION} /usr/bin/clang-format-diff;\

|

|

||||||

ln -s /usr/bin/lld-${LLVM_VERSION} /usr/bin/lld;\

|

|

||||||

ln -s /usr/bin/lldb-${LLVM_VERSION} /usr/bin/lldb;\

|

|

||||||

ln -s /usr/bin/ld.lld-${LLVM_VERSION} /usr/bin/ld.lld

|

|

||||||

|

|

||||||

RUN echo 'configure locale'; \

|

|

||||||

sed --in-place '/en_US.UTF-8/s/^#//' /etc/locale.gen ;\

|

|

||||||

locale-gen ;\

|

|

||||||

echo 'make python 3 default'; \

|

|

||||||

rm -f /usr/bin/python && ln -s /usr/bin/python3 /usr/bin/python; \

|

|

||||||

pip3 install wheel

|

|

||||||

|

|

||||||

# Configure locale

|

|

||||||

ENV LANG en_US.UTF-8

|

|

||||||

ENV LANGUAGE en_US:en

|

|

||||||

ENV LC_ALL en_US.UTF-8

|

|

||||||

|

|

||||||

RUN wget --no-verbose -O /usr/bin/bazelisk https://github.com/bazelbuild/bazelisk/releases/download/v1.17.0/bazelisk-linux-amd64; \

|

|

||||||

chmod +x /usr/bin/bazelisk; \

|

|

||||||

bazelisk --version

|

|

||||||

|

|

||||||

RUN echo 'install buildkite' ;\

|

|

||||||

apt-get install -y apt-transport-https gnupg;\

|

|

||||||

sh -c 'echo deb https://apt.buildkite.com/buildkite-agent stable main > /etc/apt/sources.list.d/buildkite-agent.list' ;\

|

|

||||||

apt-key adv --keyserver hkp://keyserver.ubuntu.com:80 --recv-keys 32A37959C2FA5C3C99EFBC32A79206696452D198 ;\

|

|

||||||

apt-get update ;\

|

|

||||||

apt-get install -y buildkite-agent tini gosu; \

|

|

||||||

apt-get clean;

|

|

||||||

COPY *.sh /usr/local/bin/

|

|

||||||

RUN chmod og+rx /usr/local/bin/*.sh

|

|

||||||

COPY --chown=buildkite-agent:buildkite-agent pre-checkout /etc/buildkite-agent/hooks

|

|

||||||

COPY --chown=buildkite-agent:buildkite-agent post-checkout /etc/buildkite-agent/hooks

|

|

||||||

|

|

||||||

# buildkite working directory

|

|

||||||

VOLUME /var/lib/buildkite-agent

|

|

||||||

|

|

||||||

ENTRYPOINT ["entrypoint.sh"]

|

|

||||||

CMD ["gosu", "buildkite-agent", "buildkite-agent", "start", "--no-color"]

|

|

||||||

|

|

@ -1,39 +0,0 @@

|

||||||

#!/usr/bin/env bash

|

|

||||||

|

|

||||||

# Copyright 2021 Google LLC

|

|

||||||

#

|

|

||||||

# Licensed under the the Apache License v2.0 with LLVM Exceptions (the "License");

|

|

||||||

# you may not use this file except in compliance with the License.

|

|

||||||

# You may obtain a copy of the License at

|

|

||||||

#

|

|

||||||

# https://llvm.org/LICENSE.txt

|

|

||||||

#

|

|

||||||

# Unless required by applicable law or agreed to in writing, software

|

|

||||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

# See the License for the specific language governing permissions and

|

|

||||||

# limitations under the License.

|

|

||||||

set -eo pipefail

|

|

||||||

|

|

||||||

USER=buildkite-agent

|

|

||||||

P="${BUILDKITE_BUILD_PATH:-/var/lib/buildkite-agent}"

|

|

||||||

set -u

|

|

||||||

mkdir -p "$P"

|

|

||||||

chown -R ${USER}:${USER} "$P"

|

|

||||||

|

|

||||||

export CCACHE_DIR="${P}"/ccache

|

|

||||||

export CCACHE_MAXSIZE=20G

|

|

||||||

mkdir -p "${CCACHE_DIR}"

|

|

||||||

chown -R ${USER}:${USER} "${CCACHE_DIR}"

|

|

||||||

|

|

||||||

# /mnt/ssh should contain known_hosts, id_rsa and id_rsa.pub .

|

|

||||||

mkdir -p /var/lib/buildkite-agent/.ssh

|

|

||||||

if [ -d /mnt/ssh ]; then

|

|

||||||

cp /mnt/ssh/* /var/lib/buildkite-agent/.ssh || echo "no "

|

|

||||||

chmod 700 /var/lib/buildkite-agent/.ssh

|

|

||||||

chmod 600 /var/lib/buildkite-agent/.ssh/*

|

|

||||||

chown -R buildkite-agent:buildkite-agent /var/lib/buildkite-agent/.ssh/

|

|

||||||

else

|

|

||||||

echo "/mnt/ssh is not mounted"

|

|

||||||

fi

|

|

||||||

exec /usr/bin/tini -g -- $@

|

|

||||||

|

|

@ -1,26 +0,0 @@

|

||||||

# Copyright 2021 Google LLC

|

|

||||||

#

|

|

||||||

# Licensed under the the Apache License v2.0 with LLVM Exceptions (the "License");

|

|

||||||

# you may not use this file except in compliance with the License.

|

|

||||||

# You may obtain a copy of the License at

|

|

||||||

#

|

|

||||||

# https://llvm.org/LICENSE.txt

|

|

||||||

#

|

|

||||||

# Unless required by applicable law or agreed to in writing, software

|

|

||||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

# See the License for the specific language governing permissions and

|

|

||||||

# limitations under the License.

|

|

||||||

|

|

||||||

# The `post-checkout` hook runs after checkout.

|

|

||||||

|

|

||||||

set -eu

|

|

||||||

|

|

||||||

# Run prune as git will start to complain "warning: There are too many unreachable loose objects; run 'git prune' to remove them.".

|

|

||||||

# That happens as we routinely drop old branches.

|

|

||||||

git prune

|

|

||||||

if [ -f ".git/gc.log" ]; then

|

|

||||||

echo ".git/gc.log exist"

|

|

||||||

cat ./.git/gc.log

|

|

||||||

rm -f ./.git/gc.log

|

|

||||||

fi

|

|

||||||

|

|

@ -1,23 +0,0 @@

|

||||||

#!/bin/bash

|

|

||||||

# Copyright 2020 Google LLC

|

|

||||||

#

|

|

||||||

# Licensed under the the Apache License v2.0 with LLVM Exceptions (the "License");

|

|

||||||

# you may not use this file except in compliance with the License.

|

|

||||||

# You may obtain a copy of the License at

|

|

||||||

#

|

|

||||||

# https://llvm.org/LICENSE.txt

|

|

||||||

#

|

|

||||||

# Unless required by applicable law or agreed to in writing, software

|

|

||||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

# See the License for the specific language governing permissions and

|

|

||||||

# limitations under the License.

|

|

||||||

|

|

||||||

# The `pre-checkout` hook will run just before your pipelines source code is

|

|

||||||

# checked out from your SCM provider

|

|

||||||

|

|

||||||

set -e

|

|

||||||

|

|

||||||

# Convert https://github.com/llvm-premerge-tests/llvm-project.git -> llvm-project

|

|

||||||

# to use the same directory for fork and origin.

|

|

||||||

BUILDKITE_BUILD_CHECKOUT_PATH="${BUILDKITE_BUILD_PATH}/$(echo $BUILDKITE_REPO | sed -E "s#.*/([^/]*)#\1#" | sed "s/.git$//")"

|

|

||||||

|

|

@ -1,46 +0,0 @@

|

||||||

# Copyright 2019 Google LLC

|

|

||||||

#

|

|

||||||

# Licensed under the the Apache License v2.0 with LLVM Exceptions (the "License");

|

|

||||||

# you may not use this file except in compliance with the License.

|

|

||||||

# You may obtain a copy of the License at

|

|

||||||

#

|

|

||||||

# https://llvm.org/LICENSE.txt

|

|

||||||

#

|

|

||||||

# Unless required by applicable law or agreed to in writing, software

|

|

||||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

# See the License for the specific language governing permissions and

|

|

||||||

# limitations under the License.

|

|

||||||

|

|

||||||

# define command line arguments

|

|

||||||

param(

|

|

||||||

[Parameter(Mandatory=$true)][string]$IMAGE_NAME,

|

|

||||||

[Parameter(Mandatory=$false)][string]$CMD="",

|

|

||||||

[string]$token

|

|

||||||

)

|

|

||||||

|

|

||||||

# set script to stop on first error

|

|

||||||

$ErrorActionPreference = "Stop"

|

|

||||||

|

|

||||||

# some docs recommend setting 2GB memory limit

|

|

||||||

docker build `

|

|

||||||

--memory 2GB `

|

|

||||||

-t $IMAGE_NAME `

|

|

||||||

--build-arg token=$token `

|

|

||||||

"$PSScriptRoot\$IMAGE_NAME"

|

|

||||||

If ($LastExitCode -ne 0) {

|

|

||||||

exit

|

|

||||||

}

|

|

||||||

|

|

||||||

$DIGEST=$(docker image inspect --format "{{range .RepoDigests}}{{.}}{{end}}" $IMAGE_NAME) -replace ".*@sha256:(.{6})(.*)$","`$1"

|

|

||||||

# mount a persistent workspace for experiments

|

|

||||||

docker run -it `

|

|

||||||

-v C:/ws:C:/ws `

|

|

||||||

-v C:/credentials:C:/credentials `

|

|

||||||

-e BUILDKITE_BUILD_PATH=C:\ws `

|

|

||||||

-e IMAGE_DIGEST=${DIGEST} `

|

|

||||||

-e PARENT_HOSTNAME=$env:computername `

|

|

||||||

$IMAGE_NAME $CMD

|

|

||||||

If ($LastExitCode -ne 0) {

|

|

||||||

exit

|

|

||||||

}

|

|

||||||

|

|

@ -1,26 +0,0 @@

|

||||||

#!/bin/bash

|

|

||||||

# Copyright 2019 Google LLC

|

|

||||||

#

|

|

||||||

# Licensed under the the Apache License v2.0 with LLVM Exceptions (the "License");

|

|

||||||

# you may not use this file except in compliance with the License.

|

|

||||||

# You may obtain a copy of the License at

|

|

||||||

#

|

|

||||||

# https://llvm.org/LICENSE.txt

|

|

||||||

#

|

|

||||||

# Unless required by applicable law or agreed to in writing, software

|

|

||||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

# See the License for the specific language governing permissions and

|

|

||||||

# limitations under the License.

|

|

||||||

|

|

||||||

# Starts a new instances of a docker image. Example:

|

|

||||||

# sudo build_run.sh buildkite-premerge-debian /bin/bash

|

|

||||||

|

|

||||||

set -eux

|

|

||||||

DIR="$( cd "$( dirname "${BASH_SOURCE[0]}" )" >/dev/null 2>&1 && pwd )"

|

|

||||||

|

|

||||||

IMAGE_NAME="${1%/}"

|

|

||||||

|

|

||||||

cd "${DIR}/${IMAGE_NAME}"

|

|

||||||

docker build -t ${IMAGE_NAME} .

|

|

||||||

docker run -i -t -v ~/.llvm-premerge-checks:/credentials -v ${DIR}/workspace:/workspace ${IMAGE_NAME} ${2}

|

|

||||||

|

|

@ -1,5 +1,3 @@

|

||||||

# TODO: remove after migrating scripts to LLVM repo.

|

|

||||||

git clone https://github.com/google/llvm-premerge-checks.git c:\llvm-premerge-checks

|

|

||||||

# Checkout path is set to a fixed short value (e.g. c:\ws\src) to keep paths

|

# Checkout path is set to a fixed short value (e.g. c:\ws\src) to keep paths

|

||||||

# short as many tools break on Windows with paths longer than 250.

|

# short as many tools break on Windows with paths longer than 250.

|

||||||

if ($null -eq $env:BUILDKITE_BUILD_PATH) { $env:BUILDKITE_BUILD_PATH = 'c:\ws' }

|

if ($null -eq $env:BUILDKITE_BUILD_PATH) { $env:BUILDKITE_BUILD_PATH = 'c:\ws' }

|

||||||

|

|

|

||||||

|

|

@ -1,105 +0,0 @@

|

||||||

FROM ubuntu:latest

|

|

||||||

|

|

||||||

RUN echo 'intall packages'; \

|

|

||||||

apt-get update; \

|

|

||||||

apt-get upgrade; \

|

|

||||||

apt-get install -y --no-install-recommends \

|

|

||||||

gosu \

|

|

||||||

locales openssh-client gnupg ca-certificates \

|

|

||||||

zip unzip wget curl git \

|

|

||||||

gdb build-essential \

|

|

||||||

ninja-build \

|

|

||||||

libelf-dev libffi-dev gcc-multilib libmpfr-dev libpfm4-dev \

|

|

||||||

# for llvm-libc tests that build mpfr and gmp from source

|

|

||||||

autoconf automake libtool \

|

|

||||||

python3 python3-psutil python3-pip python3-setuptools \

|

|

||||||

lsb-release software-properties-common \

|

|

||||||

swig python3-dev libedit-dev libncurses5-dev libxml2-dev liblzma-dev golang rsync jq \

|

|

||||||

# for llvm installation script

|

|

||||||

sudo \

|

|

||||||

# build scripts

|

|

||||||

nodejs \

|

|

||||||

# shell users

|

|

||||||

less vim

|

|

||||||

|

|

||||||

# debian stable cmake is 3.18, we need to install a more recent version.

|

|

||||||

RUN wget --no-verbose -O /cmake.sh https://github.com/Kitware/CMake/releases/download/v3.23.3/cmake-3.23.3-linux-x86_64.sh; \

|

|

||||||

chmod +x /cmake.sh; \

|

|

||||||

mkdir -p /etc/cmake; \

|

|

||||||

/cmake.sh --prefix=/etc/cmake --skip-license; \

|

|

||||||

ln -s /etc/cmake/bin/cmake /usr/bin/cmake; \

|

|

||||||

cmake --version; \

|

|

||||||

rm /cmake.sh

|

|

||||||

|

|

||||||

# LLVM must be installed after prerequsite packages.

|

|

||||||

ENV LLVM_VERSION=16

|

|

||||||

RUN echo 'install llvm ${LLVM_VERSION}' && \

|

|

||||||

wget --no-verbose https://apt.llvm.org/llvm.sh && \

|

|

||||||

chmod +x llvm.sh && \

|

|

||||||

./llvm.sh ${LLVM_VERSION} && \

|

|

||||||

apt-get update && \

|

|

||||||

apt-get install -y clang-${LLVM_VERSION} clang-format-${LLVM_VERSION} clang-tidy-${LLVM_VERSION} lld-${LLVM_VERSION} && \

|

|

||||||

ln -s /usr/bin/clang-${LLVM_VERSION} /usr/bin/clang && \

|

|

||||||

ln -s /usr/bin/clang++-${LLVM_VERSION} /usr/bin/clang++ && \

|

|

||||||

ln -s /usr/bin/clang-tidy-${LLVM_VERSION} /usr/bin/clang-tidy && \

|

|

||||||

ln -s /usr/bin/clang-tidy-diff-${LLVM_VERSION}.py /usr/bin/clang-tidy-diff && \

|

|

||||||

ln -s /usr/bin/clang-format-${LLVM_VERSION} /usr/bin/clang-format && \

|

|

||||||

ln -s /usr/bin/clang-format-diff-${LLVM_VERSION} /usr/bin/clang-format-diff && \

|

|

||||||

ln -s /usr/bin/lld-${LLVM_VERSION} /usr/bin/lld && \

|

|

||||||

ln -s /usr/bin/lldb-${LLVM_VERSION} /usr/bin/lldb && \

|

|

||||||

ln -s /usr/bin/ld.lld-${LLVM_VERSION} /usr/bin/ld.lld && \

|

|

||||||

clang --version

|

|

||||||

|

|

||||||

RUN echo 'configure locale' && \

|

|

||||||

sed --in-place '/en_US.UTF-8/s/^#//' /etc/locale.gen && \

|

|

||||||

locale-gen

|

|

||||||

ENV LANG en_US.UTF-8

|

|

||||||

ENV LANGUAGE en_US:en

|

|

||||||

ENV LC_ALL en_US.UTF-8

|

|

||||||

|

|

||||||

RUN curl -o sccache-v0.5.4-x86_64-unknown-linux-musl.tar.gz -L https://github.com/mozilla/sccache/releases/download/v0.5.4/sccache-v0.5.4-x86_64-unknown-linux-musl.tar.gz \

|

|

||||||

&& echo "4bf3ce366aa02599019093584a5cbad4df783f8d6e3610548c2044daa595d40b sccache-v0.5.4-x86_64-unknown-linux-musl.tar.gz" | shasum -a 256 -c \

|

|

||||||

&& tar xzf ./sccache-v0.5.4-x86_64-unknown-linux-musl.tar.gz \

|

|

||||||

&& mv sccache-v0.5.4-x86_64-unknown-linux-musl/sccache /usr/bin \

|

|

||||||

&& chown root:root /usr/bin/sccache \

|

|

||||||

&& ls -la /usr/bin/sccache \

|

|

||||||

&& sccache --version

|

|

||||||

|

|

||||||

RUN groupadd -g 121 runner \

|

|

||||||

&& useradd -mr -d /home/runner -u 1001 -g 121 runner \

|

|

||||||

&& mkdir -p /home/runner/_work

|

|

||||||

|

|

||||||

WORKDIR /home/runner

|

|

||||||

|

|

||||||

# https://github.com/actions/runner/releases

|

|

||||||

ARG RUNNER_VERSION="2.311.0"

|

|

||||||

ARG RUNNER_ARCH="x64"

|

|

||||||

|

|

||||||

RUN curl -f -L -o runner.tar.gz https://github.com/actions/runner/releases/download/v${RUNNER_VERSION}/actions-runner-linux-${RUNNER_ARCH}-${RUNNER_VERSION}.tar.gz \

|

|

||||||

&& tar xzf ./runner.tar.gz \

|

|

||||||

&& rm runner.tar.gz

|

|

||||||

|

|

||||||

# https://github.com/actions/runner-container-hooks/releases

|

|

||||||

ARG RUNNER_CONTAINER_HOOKS_VERSION="0.4.0"

|

|

||||||

RUN curl -f -L -o runner-container-hooks.zip https://github.com/actions/runner-container-hooks/releases/download/v${RUNNER_CONTAINER_HOOKS_VERSION}/actions-runner-hooks-k8s-${RUNNER_CONTAINER_HOOKS_VERSION}.zip \

|

|

||||||

&& unzip ./runner-container-hooks.zip -d ./k8s \

|

|

||||||

&& rm runner-container-hooks.zip

|

|

||||||

|

|

||||||

RUN chown -R runner:runner /home/runner

|

|

||||||

|

|

||||||

# Copy entrypoint but script. There is no point of adding

|

|

||||||

# `ENTRYPOINT ["/entrypoint.sh"]` as k8s runs it with explicit command and

|

|

||||||

# entrypoint is ignored.

|

|

||||||

COPY entrypoint.sh /entrypoint.sh

|

|

||||||

RUN chmod +x /entrypoint.sh

|

|

||||||

# USER runner

|

|

||||||

|

|

||||||

# We don't need entry point and token logic as it's all orchestrated by action runner controller.

|

|

||||||

# BUT we need to start server etc

|

|

||||||

|

|

||||||

|

|

||||||

# RUN chmod +x /entrypoint.sh /token.sh

|

|

||||||

# # try: USER runner instead of gosu

|

|

||||||

# ENTRYPOINT ["/entrypoint.sh"]

|

|

||||||

# CMD ["sleep", "infinity"]

|

|

||||||

# CMD ["./bin/Runner.Listener", "run", "--startuptype", "service"]

|

|

||||||

|

|

@ -1,5 +0,0 @@

|

||||||

steps:

|

|

||||||

- name: 'gcr.io/cloud-builders/docker'

|

|

||||||

args: [ 'build', '-t', 'us-central1-docker.pkg.dev/llvm-premerge-checks/docker/github-linux', '.' ]

|

|

||||||

images:

|

|

||||||

- 'us-central1-docker.pkg.dev/llvm-premerge-checks/docker/github-linux:latest'

|

|

||||||

|

|

@ -1,33 +0,0 @@

|

||||||

#!/usr/bin/env bash

|

|

||||||

|

|

||||||

# Copyright 2023 Google LLC

|

|

||||||

#

|

|

||||||

# Licensed under the the Apache License v2.0 with LLVM Exceptions (the "License");

|

|

||||||

# you may not use this file except in compliance with the License.

|

|

||||||

# You may obtain a copy of the License at

|

|

||||||

#

|

|

||||||

# https://llvm.org/LICENSE.txt

|

|

||||||

#

|

|

||||||

# Unless required by applicable law or agreed to in writing, software

|

|

||||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

# See the License for the specific language governing permissions and

|

|

||||||

# limitations under the License.

|

|

||||||

set -ueo pipefail

|

|

||||||

|

|

||||||

export PATH=${PATH}:/home/runner

|

|

||||||

|

|

||||||

USER=runner

|

|

||||||

WORKDIR=${WORKDIR:-/home/runner/_work}

|

|

||||||

|

|

||||||

export SCCACHE_DIR="${WORKDIR}/sccache"

|

|

||||||

mkdir -p "${SCCACHE_DIR}"

|

|

||||||

chown -R ${USER}:${USER} "${SCCACHE_DIR}"

|

|

||||||

chmod oug+rw "${SCCACHE_DIR}"

|

|

||||||

gosu runner bash -c 'SCCACHE_DIR="${SCCACHE_DIR}" SCCACHE_IDLE_TIMEOUT=0 SCCACHE_CACHE_SIZE=20G sccache --start-server'

|

|

||||||

sccache --show-stats

|

|

||||||

|

|

||||||

[[ ! -d "${WORKDIR}" ]] && mkdir -p "${WORKDIR}"

|

|

||||||

|

|

||||||

# exec /usr/bin/tini -g -- $@

|

|

||||||

gosu runner "$@"

|

|

||||||

|

|

@ -1,50 +0,0 @@

|

||||||

#!/bin/bash

|

|

||||||

|

|

||||||

# https://github.com/myoung34/docker-github-actions-runner/blob/master/token.sh

|

|

||||||

# Licensed under MIT

|

|

||||||

# https://github.com/myoung34/docker-github-actions-runner/blob/master/LICENSE

|

|

||||||

|

|

||||||

set -euo pipefail

|

|

||||||

|

|

||||||

_GITHUB_HOST=${GITHUB_HOST:="github.com"}

|

|

||||||

|

|

||||||

# If URL is not github.com then use the enterprise api endpoint

|

|

||||||

if [[ ${GITHUB_HOST} = "github.com" ]]; then

|

|

||||||

URI="https://api.${_GITHUB_HOST}"

|

|

||||||

else

|

|

||||||

URI="https://${_GITHUB_HOST}/api/v3"

|

|

||||||

fi

|

|

||||||

|

|

||||||

API_VERSION=v3

|

|

||||||

API_HEADER="Accept: application/vnd.github.${API_VERSION}+json"

|

|

||||||

AUTH_HEADER="Authorization: token ${ACCESS_TOKEN}"

|

|

||||||

CONTENT_LENGTH_HEADER="Content-Length: 0"

|

|

||||||

|

|

||||||

case ${RUNNER_SCOPE} in

|

|

||||||

org*)

|

|

||||||

_FULL_URL="${URI}/orgs/${ORG_NAME}/actions/runners/registration-token"

|

|

||||||

;;

|

|

||||||

|

|

||||||

ent*)

|

|

||||||

_FULL_URL="${URI}/enterprises/${ENTERPRISE_NAME}/actions/runners/registration-token"

|

|

||||||

;;

|

|

||||||

|

|

||||||

*)

|

|

||||||

_PROTO="https://"

|

|

||||||

# shellcheck disable=SC2116

|

|

||||||

_URL="$(echo "${REPO_URL/${_PROTO}/}")"

|

|

||||||

_PATH="$(echo "${_URL}" | grep / | cut -d/ -f2-)"

|

|

||||||

_ACCOUNT="$(echo "${_PATH}" | cut -d/ -f1)"

|

|

||||||

_REPO="$(echo "${_PATH}" | cut -d/ -f2)"

|

|

||||||

_FULL_URL="${URI}/repos/${_ACCOUNT}/${_REPO}/actions/runners/registration-token"

|

|

||||||

;;

|

|

||||||

esac

|

|

||||||

|

|

||||||

RUNNER_TOKEN="$(curl -XPOST -fsSL \

|

|

||||||

-H "${CONTENT_LENGTH_HEADER}" \

|

|

||||||

-H "${AUTH_HEADER}" \

|

|

||||||

-H "${API_HEADER}" \

|

|

||||||

"${_FULL_URL}" \

|

|

||||||

| jq -r '.token')"

|

|

||||||

|

|

||||||

echo "{\"token\": \"${RUNNER_TOKEN}\", \"full_url\": \"${_FULL_URL}\"}"

|

|

||||||

|

|

@ -1,32 +0,0 @@

|

||||||

FROM ubuntu:latest

|

|

||||||

|

|

||||||

RUN apt-get update ;\

|

|

||||||

apt-get upgrade -y;\

|

|

||||||

apt-get install -y --no-install-recommends \

|

|

||||||

locales openssh-client gnupg ca-certificates \

|

|

||||||

build-essential \

|

|

||||||

zip wget git less vim \

|

|

||||||

python3 python3-psutil python3-pip python3-setuptools pipenv \

|

|

||||||

# PostgreSQL

|

|

||||||

libpq-dev \

|

|

||||||

# debugging

|

|

||||||

less vim wget curl \

|

|

||||||

rsync jq tini gosu;

|

|

||||||

|

|

||||||

RUN echo 'configure locale' && \

|

|

||||||

sed --in-place '/en_US.UTF-8/s/^#//' /etc/locale.gen && \

|

|

||||||

locale-gen

|

|

||||||

ENV LANG en_US.UTF-8

|

|

||||||

ENV LANGUAGE en_US:en

|

|

||||||

ENV LC_ALL en_US.UTF-8

|

|

||||||

|

|

||||||

COPY *.sh /usr/local/bin/

|

|

||||||

# requirements.txt generated by running `pipenv lock -r > ../../containers/stats/requirements.txt` in `scripts/metrics`.

|

|

||||||

# COPY requirements.txt /tmp/

|

|

||||||

# RUN pip install -q -r /tmp/requirements.txt

|

|

||||||

RUN chmod og+rx /usr/local/bin/*.sh

|

|

||||||

RUN wget -q https://dl.google.com/cloudsql/cloud_sql_proxy.linux.amd64 -O /usr/local/bin/cloud_sql_proxy;\

|

|

||||||

chmod +x /usr/local/bin/cloud_sql_proxy

|

|

||||||

|

|

||||||

ENTRYPOINT ["entrypoint.sh"]

|

|

||||||

CMD ["/bin/bash"]

|

|

||||||

|

|

@ -1,22 +0,0 @@

|

||||||

#!/usr/bin/env bash

|

|

||||||

|

|

||||||

# Copyright 2021 Google LLC

|

|

||||||

#

|

|

||||||

# Licensed under the the Apache License v2.0 with LLVM Exceptions (the "License");

|

|

||||||

# you may not use this file except in compliance with the License.

|

|

||||||

# You may obtain a copy of the License at

|

|

||||||

#

|

|

||||||

# https://llvm.org/LICENSE.txt

|

|

||||||

#

|

|

||||||

# Unless required by applicable law or agreed to in writing, software

|

|

||||||

# distributed under the License is distributed on an "AS IS" BASIS,

|

|

||||||

# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

|

|

||||||

# See the License for the specific language governing permissions and

|

|

||||||

# limitations under the License.

|

|

||||||

set -euo pipefail

|

|

||||||

|

|

||||||

git clone --depth 1 https://github.com/google/llvm-premerge-checks.git ~/llvm-premerge-checks

|

|

||||||

cd ~/llvm-premerge-checks

|

|

||||||

git fetch origin "${SCRIPTS_REFSPEC:=main}":x

|

|

||||||

git checkout x

|

|

||||||

exec /usr/bin/tini -g -- $@

|

|

||||||

|

|

@ -1,27 +0,0 @@

|

||||||

backoff==1.10.0

|

|

||||||

certifi==2023.7.22

|

|

||||||

chardet==4.0.0

|

|

||||||

ftfy==6.0.1; python_version >= '3.6'

|

|

||||||

gitdb==4.0.7

|

|

||||||

gitpython==3.1.41

|

|

||||||

idna==2.10

|

|

||||||

lxml==4.9.1

|

|

||||||

mailchecker==4.0.7

|

|

||||||

pathspec==0.8.1

|

|

||||||

phabricator==0.8.1

|

|

||||||

phonenumbers==8.12.23

|

|

||||||

psycopg2-binary==2.8.6

|

|

||||||

pyaml==20.4.0

|

|

||||||

python-benedict==0.24.0

|

|

||||||

python-dateutil==2.8.1

|

|

||||||

python-fsutil==0.5.0

|

|

||||||

python-slugify==5.0.2

|

|

||||||

pyyaml==5.4.1

|

|

||||||

requests==2.31.0

|

|

||||||

six==1.16.0

|

|

||||||

smmap==4.0.0

|

|

||||||

text-unidecode==1.3

|

|

||||||

toml==0.10.2

|

|

||||||

urllib3==1.26.18

|

|

||||||

wcwidth==0.2.5

|

|

||||||

xmltodict==0.12.0

|

|

||||||

|

|

@ -1,29 +0,0 @@

|

||||||

# clang-tidy checks

|

|

||||||

## Warning is not useful

|

|